Model Context Protocol

Bridging the Gap Between AI and the Real World

The Model Context Protocol (MCP) has exploded onto the AI scene with remarkable speed. If you're in the tech industry, chances are you've already heard the acronym "MCP" mentioned, seen it discussed on social media, or read about it in industry publications. In a matter of months, this emerging standard has captured the attention of developers, enterprise architects, and AI researchers alike, becoming one of the most discussed technical innovations in the AI ecosystem.

But what exactly is driving this unprecedented level of interest and adoption? At its core, MCP addresses one of the most significant limitations of today's AI models: their isolation from real-time data and external systems. This revolutionary protocol is fundamentally changing how AI systems interact with information and tools, promising to unlock entirely new categories of AI applications.

What is MCP and Why Does it Matter?

MCP is a standardized way for AI models to request and receive context from the outside world. In practice, this means an AI assistant can combine multiple capabilities (remembering your previous conversations, checking your current account balance, booking appointments on your calendar, or performing real-time research) within a single, coherent interaction.

Before MCP emerged in late 2024, connecting AI models to external tools required custom integration work for each specific pairing of model and tool. This created what engineers call an "M×T problem" (M models times T tools, each needing its own custom connector), resulting in fragmented architectures and significant development overhead. For a typical enterprise using just 5 AI models and 10 external tools, this meant maintaining 50 separate integration points, a maintenance burden that often made comprehensive AI integration financially unfeasible.
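
To make the arithmetic concrete, here is a minimal sketch comparing the two approaches. It assumes that, with a shared protocol, each model and each tool needs only one adapter; the function names are illustrative and not part of any MCP library.

```python
def point_to_point_connectors(models: int, tools: int) -> int:
    """Every model/tool pair needs its own custom connector (the M×T case)."""
    return models * tools


def shared_protocol_adapters(models: int, tools: int) -> int:
    """Assumption: each model and each tool implements the shared protocol once."""
    return models + tools


models, tools = 5, 10  # the enterprise example from the paragraph above
print(point_to_point_connectors(models, tools))  # 50
print(shared_protocol_adapters(models, tools))   # 15
```

The gap widens as either number grows, which is why the pairwise approach becomes hard to sustain.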

The Origin Story: Born from Necessity

Unlike many technologies introduced through academic research, MCP emerged organically from engineering practices at Anthropic. While building infrastructure to help their AI assistant Claude maintain context, use tools effectively, and remember past interactions, engineers noticed recurring patterns in their architecture.

These patterns - consistent ways of routing memory, invoking tools, and managing message context - eventually crystallized into what we now know as the Model Context Protocol. It became the invisible connective tissue linking AI models with the information they need, when they need it.

What's remarkable is how quickly MCP has gone from an internal engineering practice to one of the most discussed topics in AI development. In just months, it's become a focal point for discussions about AI architecture, with companies racing to implement their own versions and share their approaches.

How MCP Works: Architecture and Flow

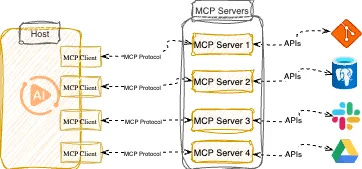

MCP systems typically consist of several key components:

1. MCP Server: The central router that receives context requests and coordinates responses

2. Client Applications: Language models, user-facing apps, or other systems needing contextual information

3. Context Providers: Specialized modules supplying different types of information (user data, tool outputs, etc.)

4. Messaging Layer: The channel enabling real-time communication between components

In a typical interaction, an AI model might ask the MCP server for relevant context about a user's situation. The server gathers precisely what's needed from various sources and returns only the essential information, allowing the AI to provide a contextualized, helpful response.
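
The request-and-response flow can be sketched in a few lines. The published MCP specification frames its messages as JSON-RPC 2.0, with methods such as `tools/list` and `tools/call`; everything else below (the `get_account_balance` tool, the in-memory data, the `handle_request` function) is a hypothetical stand-in, and a real server would use an official SDK and a proper transport rather than this in-process toy.

```python
import json
from typing import Any, Callable


# Hypothetical context provider: a plain function keyed by tool name.
def get_account_balance(user_id: str) -> str:
    balances = {"u-123": "1,250.00 USD"}  # stand-in for a real data source
    return balances.get(user_id, "unknown")


TOOLS: dict[str, Callable[..., str]] = {"get_account_balance": get_account_balance}


def handle_request(raw: str) -> str:
    """Route one JSON-RPC 2.0 request to the matching provider."""
    request = json.loads(raw)
    reply: dict[str, Any] = {"jsonrpc": "2.0", "id": request.get("id")}
    method, params = request.get("method"), request.get("params", {})
    if method == "tools/list":
        # Simplified: real servers also describe each tool and its input schema.
        reply["result"] = {"tools": [{"name": name} for name in TOOLS]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            reply["error"] = {"code": -32602, "message": "Unknown tool"}
        else:
            text = tool(**params.get("arguments", {}))
            reply["result"] = {"content": [{"type": "text", "text": text}]}
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return json.dumps(reply)


call = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_account_balance", "arguments": {"user_id": "u-123"}},
}
response = json.loads(handle_request(json.dumps(call)))
print(response["result"]["content"][0]["text"])  # 1,250.00 USD
```

The client never needs to know how the balance is fetched; it only needs the tool's name and arguments, which is what lets one integration serve many models.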

MCP vs. Traditional APIs: A New Paradigm

MCP represents a fundamental shift from how we've traditionally connected systems. As Norah Sakal points out in her analysis, standard APIs handle isolated function calls in a transactional and stateless manner, while MCP operates with persistent context awareness:

While APIs deliver specific pieces of information in isolation, MCP orchestrates context across a dynamic landscape of memory, tools, and interactions, providing a complete picture for more helpful assistance.

The Business Case: Why Organizations Need MCP

For organizations deploying AI, MCP delivers transformative value across multiple dimensions. The protocol enables remarkable integration efficiency by allowing companies to connect AI models to existing tools and data once, eliminating the need to build custom connectors for each specific use case. This approach ensures consistent memory, as AI assistants can now reliably access and maintain important context across different interactions and platforms.

MCP also addresses the critical challenge of real-time accuracy. Rather than relying on potentially outdated training data, AI systems can access up-to-date information at the moment of interaction. This capability leads directly to improved user experiences through more contextually aware and personalized AI interactions. Organizations also gain valuable future-proofing benefits, as they can swap or upgrade AI models without rebuilding their entire integration stack.

By providing a standardized way for AI to access contextual information, MCP helps organizations extract significantly more value from both their AI investments and existing data infrastructure.

MCP Security: Challenges and Solutions

Like any transformative technology in its early stages, MCP introduces important security considerations that are being actively addressed:

Key Security Challenges

The rapid growth of MCP adoption has necessitated careful attention to security considerations. Context leakage represents a significant concern, as persistent contexts may store sensitive information that could be exposed if not properly handled. Similarly, prompt injection attacks present another vulnerability, where malicious inputs could potentially bypass intended behaviours or moderation filters.

Session management emerges as a critical area requiring robust implementation, as improper handling could enable session hijacking or replay attacks that compromise system integrity. The centralized nature of MCP servers creates an additional security dimension, as these components can become high-value targets for attackers seeking to gain broad access to connected systems. Finally, supply chain concerns remain paramount, with organizations needing assurance about the integrity and provenance of MCP server deployments.

OAuth in Plain Language

OAuth is like a hotel key card system for digital services:

1. Without OAuth: You'd need to give your actual home key to every service you use, giving them full access to your house.

2. With OAuth: You give services a specialized "key card" that only works for specific rooms and expires after a set time.

In MCP, OAuth helps control which tools and data sources an AI can access on your behalf, without requiring you to share your actual passwords or credentials with the AI system itself.
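
The key card idea maps onto two properties of an access token: a limited set of scopes and an expiry time. The sketch below models only those two properties; the `KeyCard` class and the scope names are invented for illustration and are not part of OAuth or any MCP SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KeyCard:
    """Hypothetical stand-in for an access token: scoped and time-limited."""
    scopes: frozenset[str]
    expires_at: float  # Unix timestamp

    def allows(self, scope: str, now: float) -> bool:
        # Access requires both an unexpired card and a matching scope.
        return now < self.expires_at and scope in self.scopes


card = KeyCard(scopes=frozenset({"calendar:read"}), expires_at=1_000.0)
print(card.allows("calendar:read", now=500.0))    # True: right room, card still valid
print(card.allows("email:send", now=500.0))       # False: room not on the card
print(card.allows("calendar:read", now=1_500.0))  # False: card has expired
```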

Enterprise-Grade Solutions Emerging

The security landscape for MCP is improving dramatically:

1. Authentication Evolution: While Christian Posta noted that "The MCP OAuth specification blurs the distinction between a 'resource server' and an 'authorization server'", the community is actively working to better align MCP with enterprise identity standards.

2. Managed Security Controls: Providers like Cloudflare now offer built-in security features:

- OAuth implementation specifically designed for MCP

- Rate limiting to prevent abuse

- Cost controls for automated access

- Verification mechanisms
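
To give a feel for what rate limiting involves, here is a minimal token-bucket sketch. It is a generic, widely used technique shown under simplifying assumptions (a single process, a caller-supplied clock); it is not a description of how Cloudflare or any other provider implements its controls.

```python
class TokenBucket:
    """Allow bursts of up to `capacity` calls, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float, now: float = 0.0) -> None:
        self.capacity = capacity
        self.rate = rate
        self.tokens = float(capacity)
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


bucket = TokenBucket(capacity=2, rate=1.0)
print([bucket.allow(now=t) for t in (0.0, 0.0, 0.0, 1.0)])  # [True, True, False, True]
```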

Practical Security Recommendations

Organizations implementing MCP should integrate several security practices into their deployment strategies. Strong authentication forms the foundation, with leading implementers using short-lived JSON Web Tokens (JWTs) and implementing refresh token rotation. This approach significantly reduces the risk window for compromised credentials.
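
As an illustration of what "short-lived" means in practice, the sketch below issues and verifies an HS256-signed JWT with a five-minute lifetime using only the Python standard library. The function names, the subject value, and the default lifetime are assumptions chosen for the example; a production system would normally rely on a maintained JWT library and an identity provider, and refresh token rotation is not shown here.

```python
import base64
import hashlib
import hmac
import json


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(subject: str, secret: bytes, now: int, ttl_seconds: int = 300) -> str:
    """Build a signed token whose `exp` claim is `ttl_seconds` after `now`."""
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {"sub": subject, "iat": now, "exp": now + ttl_seconds}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode())
        for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode(), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_token(token: str, secret: bytes, now: int) -> dict:
    """Check the signature first, then reject tokens at or past their expiry."""
    signing_input, _, signature = token.rpartition(".")
    expected = hmac.new(secret, signing_input.encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(signing_input.split(".")[1]))
    if now >= claims["exp"]:
        raise ValueError("token expired")
    return claims


secret = b"demo-secret-do-not-use"  # placeholder; never hard-code real secrets
token = issue_token("context-provider-crm", secret, now=1_000)
print(verify_token(token, secret, now=1_100)["sub"])  # context-provider-crm
```

A stolen token is only useful until its `exp` time passes, which is the reduced risk window the paragraph above describes.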

Equally important is enforcing least privilege principles throughout the MCP stack. Security experts recommend creating purpose-specific service accounts for each MCP context provider, with permissions scoped precisely to the minimum data access required. For example, a customer data provider should only access customer records, not broader organizational data.

Context transparency mechanisms provide another critical security layer. Enterprise implementations now commonly include context logging systems that record which context was injected, when, and at whose request, creating an audit trail that security teams can monitor for anomalies. Tool sandboxing techniques isolate external tools to prevent lateral movement within systems, typically implemented through containerization or function-based isolation. Finally, regular security audits should be scheduled, with specialized focus on MCP-specific vulnerabilities and potential context leakage patterns.
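
A context log of this kind can be very small. The sketch below records what was injected, when, and at whose request, and deliberately stores only the names of the injected fields rather than their values so the log itself does not become a source of leakage. The class names, field names, and sample values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ContextAuditRecord:
    timestamp: float               # when the context was injected
    requested_by: str              # who or what asked for it
    provider: str                  # which context provider supplied it
    context_keys: tuple[str, ...]  # names of injected fields, not their values


class ContextAuditLog:
    def __init__(self) -> None:
        self._records: list[ContextAuditRecord] = []

    def record(self, timestamp: float, requested_by: str, provider: str,
               context: dict) -> ContextAuditRecord:
        entry = ContextAuditRecord(timestamp, requested_by, provider,
                                   tuple(sorted(context)))
        self._records.append(entry)
        return entry

    def to_json_lines(self) -> str:
        """Serialize one JSON object per line for shipping to a log pipeline."""
        return "\n".join(json.dumps(asdict(r)) for r in self._records)


log = ContextAuditLog()
log.record(1_700_000_000.0, "assistant-session-42", "crm",
           {"customer_name": "Ada", "plan": "pro"})
print(log.to_json_lines())
```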

MCP in the Ecosystem: Relation to RAG and A2A

MCP exists within a broader ecosystem of AI integration technologies, forming part of an emerging technical stack rather than standing in isolation. Understanding how these technologies complement each other helps organizations build more comprehensive AI architectures.

Retrieval-Augmented Generation (RAG) and MCP serve different but complementary purposes in the AI stack. While RAG specifically enhances AI outputs by pulling relevant information from knowledge bases during generation, MCP provides a universal framework for all types of contextual exchanges. In practice, many organizations implement RAG as one type of context provider within their broader MCP architecture.

Similarly, the Agent-to-Agent Protocol (A2A) addresses a different dimension of AI integration than MCP. Where MCP manages context exchange between models and external systems, A2A protocols focus on standardizing communication between different AI agents. The complementary nature becomes evident in multi-agent systems, where MCP typically handles the contextual grounding (connecting agents to real-world data) while A2A manages the inter-agent coordination patterns.

A key strength of MCP's architecture lies in its modular nature; organizations can incorporate these and other approaches as components within their MCP implementation, creating a unified integration layer that spans multiple AI technologies without redundant integration efforts.

The Optimistic Future of MCP

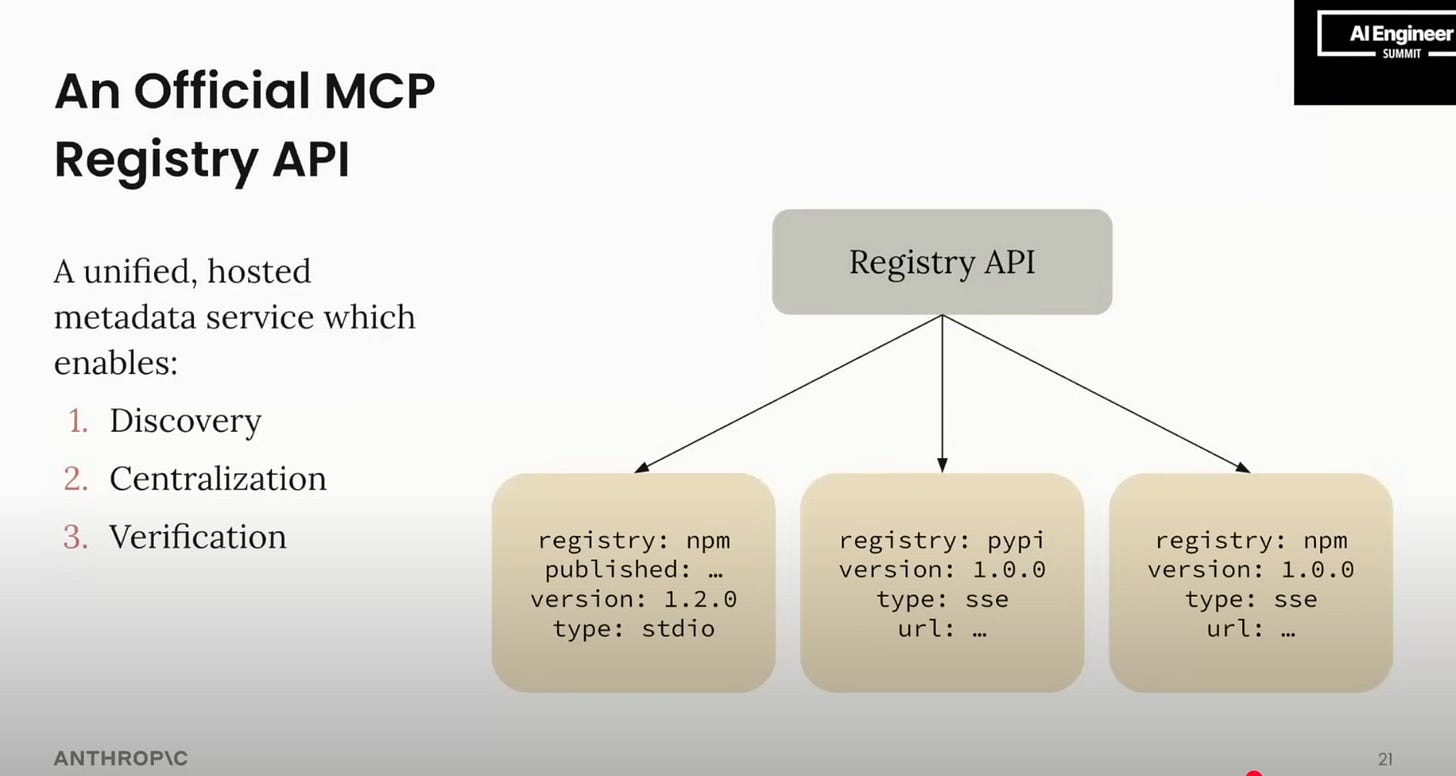

The trajectory of MCP development points toward a rapidly evolving future with transformative potential for AI integration. Among the most anticipated developments is the Official MCP Registry System. This system will offer centralized registries for MCP servers with cryptographic verification mechanisms, version pinning, and trust validation chains, directly addressing the supply chain vulnerabilities highlighted in security research.

Security standardization is accelerating across the ecosystem. What began as proprietary security implementations by early providers like Cloudflare is evolving into a comprehensive security framework with consistent controls and best practices.

The remarkable pace of these developments - compressing what would typically be years of standards evolution into months - stems from unprecedented industry collaboration around MCP.

From Pattern to Protocol

While MCP is not yet a formal industry standard, it is quickly evolving from an internal design pattern into something much broader. Its modular architecture and growing adoption suggest it could become a de facto standard for context management in AI systems. The recent endorsement by Sam Altman and OpenAI, which highlighted upcoming MCP support across products like the ChatGPT desktop app and Agents SDK, is a major step in that direction, signalling momentum toward broader standardization.

Conclusion: The Secure Foundation for AI's Future

The Model Context Protocol represents much more than a technical specification; it fundamentally reshapes how AI systems interact with the world around them. By solving the context problem while actively addressing security concerns, MCP has rapidly evolved from experimental concept to enterprise-ready protocol in less than a year.

For organizations building AI capabilities today, implementing MCP could represent the difference between creating isolated, limited AI experiences and developing truly transformative cognitive systems that seamlessly integrate with digital ecosystems. The protocol's rapid security evolution and growing ecosystem of tools suggest that early adopters will gain significant advantages in both implementation efficiency and capability deployment.

As AI becomes increasingly central to business operations and customer experiences, MCP is positioned to be the connective tissue that brings context, intelligence, and real-world awareness together, establishing a secure foundation for the next generation of AI applications.

Are you implementing MCP in your organization? What challenges and opportunities are you encountering? Share your experiences in the comments below.